Evaluation Function / Batch Test (new)

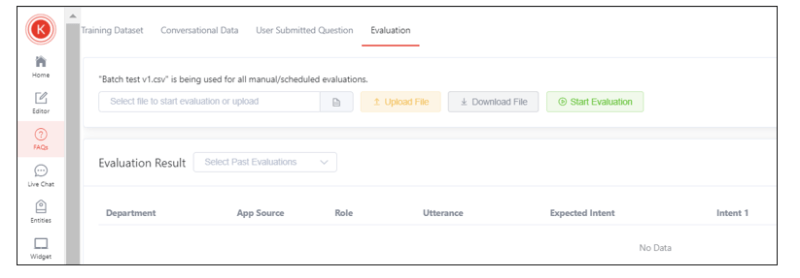

Explore our FAQ section and choose Evaluation to access our batch testing feature. Simplify your testing process with KeyReply's tools.

In the side navigation bar, select “FAQ”, then select “Evaluation” in the top bar.

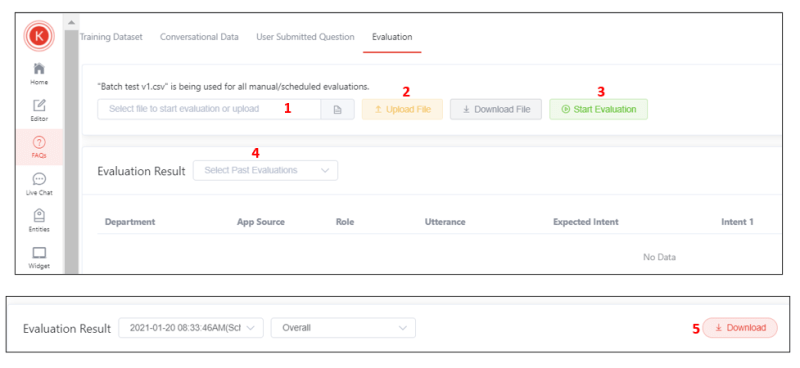

1. Upload a file for evaluation by clicking on the upload icon.

2. Select the file to be uploaded and confirm the upload.

- The default file should be the test set file assigned to your model. Refer to “Maintaining the test set” below for how to create or maintain a test set.

- If there is currently an uploaded file, the above steps can be skipped.

- Note that a new upload replaces the existing file.

- To back up the current copy before replacing the file, click on the download icon to download the existing file.

To add to or edit the uploaded file, download the current file, make your changes, and upload it again, leaving the rest of the contents unchanged.

3. Start an on-demand evaluation by clicking on the button that starts the evaluation. The evaluation usually takes a few minutes, and may take longer for larger files. Once the evaluation is done, the results are shown in the panel below.

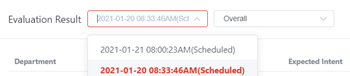

4. For scheduled evaluations, select past Scheduled Evaluation results from the dropdown list.

Note that On-Demand Evaluation results will not be stored.

5. You can export the data by clicking on the export button.

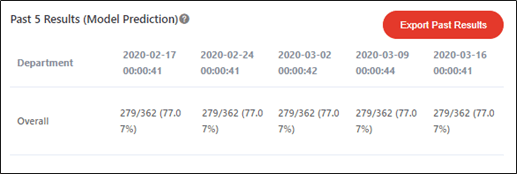

Comparing past results

For easy comparison, the 5 latest scheduled results are detailed below the Accuracy Breakdown. You can export the past results by clicking on the export button. The export includes all past scheduled test results, not just the 5 latest.

Maintaining the test set

In machine learning, a test set is a set of examples held back from training and used to assess the performance of a trained model, in this case your trained FAQ model.

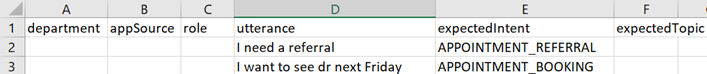

To upload the test set, prepare a CSV file that follows the structure and header row shown above:

- Column for the department: Fill in this column only if you are assigned to a department with a department ID given to you.

- Note: This is different from the handover live-chat department.

- Column for appSource: Fill in this column only if your chatbot has multiple entry points and the appSource ID has been given to you.

- Column for the role: Fill in this column with the role ID provided, only if your chatbot has a role function.

- Column for the utterance: This column is compulsory. Fill in this column with the test set questions.

- Column for the expectedIntent: This column is compulsory. Fill in this column with the expected correct intent for the corresponding utterance.

- Column for the expectedTopic: Fill in this column only if you have the FAQ Topic Bot feature turned on. This column should be filled with the userQueryTopic or the title of each FAQ topic.
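As an illustration of the structure described above, the following is a minimal Python sketch that builds a test set CSV and rejects rows missing a compulsory column. The header names `department`, `role`, and `utterance`, the column order, and the sample intents are assumptions made for this example; use the exact header row shown in the product screenshot.

```python
import csv
import io

# Assumed header names and order; confirm against the template shown in the product.
HEADERS = ["department", "appSource", "role", "utterance", "expectedIntent", "expectedTopic"]
REQUIRED = ("utterance", "expectedIntent")

def build_test_set_csv(rows):
    """Return CSV text for the rows, rejecting rows that miss a compulsory column."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=HEADERS, lineterminator="\n")
    writer.writeheader()
    for index, row in enumerate(rows, start=1):
        missing = [name for name in REQUIRED if not str(row.get(name, "")).strip()]
        if missing:
            raise ValueError(f"row {index} is missing compulsory column(s): {', '.join(missing)}")
        # Optional columns are left blank when not supplied.
        writer.writerow({name: row.get(name, "") for name in HEADERS})
    return buffer.getvalue()

# Hypothetical sample rows for illustration only.
sample_rows = [
    {"utterance": "What are your opening hours?", "expectedIntent": "opening_hours"},
    {"utterance": "How do I reset my password?", "expectedIntent": "reset_password",
     "expectedTopic": "Account"},
]
csv_text = build_test_set_csv(sample_rows)
print(csv_text)
```

Save the resulting text to a file with a `.csv` extension before uploading it.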

When should you maintain the test set?

- Generally, keep the test set unchanged, so that results from different tests can be compared directly.

- You should update the test set when a new intent is added, so that the test coverage would show the performance of that intent as well.

- When you change an intent name in the FAQ training dataset, update the intent name in the test set as well.

- When an intent has been removed or disabled, please remove the intent along with its set of examples from the test set.
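The checks above can be done by hand, but a small script may help when the test set is large. The following is a minimal sketch, assuming you keep your own list of currently active intent names; the function name `check_test_set_intents` and the sample intents are hypothetical and not part of the product.

```python
import csv
import io

def check_test_set_intents(csv_text, active_intents):
    """Compare the expectedIntent column with the currently active intents."""
    reader = csv.DictReader(io.StringIO(csv_text))
    tested = {row["expectedIntent"].strip() for row in reader if row.get("expectedIntent")}
    active = set(active_intents)
    return {
        "untested": sorted(active - tested),  # new intents with no test coverage yet
        "stale": sorted(tested - active),     # renamed, removed, or disabled intents
    }

# Hypothetical test set content and intent list for illustration only.
example_csv = (
    "utterance,expectedIntent\n"
    "What are your opening hours?,opening_hours\n"
    "Where is the clinic?,clinic_location\n"
)
report = check_test_set_intents(example_csv, ["opening_hours", "book_appointment"])
print(report)
```

Intents listed under `untested` need new test questions, and rows whose intent is listed under `stale` should be renamed or removed.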

Important notes:

- Only CSV files can be uploaded, so save your test set in CSV format.

- DO NOT use the test set questions as training data. Otherwise, it is like letting students revise the actual exam questions before sitting for the exam, which defeats the purpose of the test.
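To check that no test set question has slipped into the training data, the two lists of utterances can be compared. The following is a minimal sketch, assuming both lists are already loaded as Python strings; the helper names and the sample utterances are hypothetical.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies still match."""
    return " ".join(text.lower().split())

def find_overlap(training_utterances, test_utterances):
    """Return the test utterances that also appear in the training data."""
    trained = {normalize(u) for u in training_utterances}
    return [u for u in test_utterances if normalize(u) in trained]

# Hypothetical utterances for illustration only.
training = ["What are your opening hours?", "How do I reset my password?"]
test = ["what are your  opening hours?", "Can I change my appointment?"]
overlap = find_overlap(training, test)
print(overlap)
```

Any utterance returned should be removed from either the training dataset or the test set. This check only catches near-identical text, not paraphrases.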